Natural Language Processing and Deep Learning have the potential to overhaul patent operations for large patent departments. Work that used to cost hundreds of dollars or pounds per hour may come to cost cents or pence. This post looks at where I would be investing research funds.

The Path to Automation

In law, the path to automation is typically as follows:

Qualified Legal Professional > Associate > Paralegal > Outsourcing > Automation

Work is standardised and commoditised as we move down the chain. Today we will be looking at the last stage in the chain: automation.

Potential Applications

At a high level, here are some potential applications of deep learning models that have been trained on a large body of patent publications:

- Invention Disclosure > Patent Specification +/ Claims (Drafting)

- Patent Claims + Citation > Amended Claims (Amendment)

- Patent Claims > Corpus > Citations (Patent Search)

- Invention Disclosure > Citations (Patent Search)

- Patent Specification + Claims > Cleaned Patent Specification + Claims (Proof Reading)

- Figures > Patent Description (Drafting)

- Claims > Figures +/ Patent Description (Drafting)

- Product Description (e.g. Manual / Website) > Citation (Infringement)

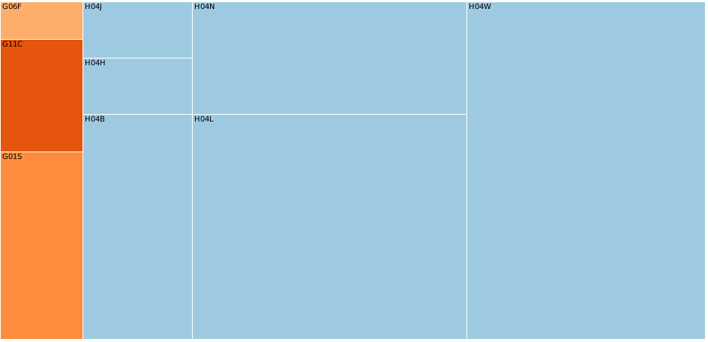

- Group of Patent Documents > Summary Clusters (Text or Image) (Landscaping)

- Official Communication > Response Letter Text (Prosecution)

Caveat

I know there is a lot of hype out there and I don’t particularly want to be responsible for pouring oil on the flames of ignorance. I have tried to base these thoughts on widely reviewed research papers. The aim is to provide a piece of informed science fiction and a guide to what may be. (I did originally call it “Your Patent Department 2020” :).

Many of the things discussed below are still a long way off, and will require a lot of hard work. However, the same was said 10 years ago of many amazing technologies we now have in production (such as facial tagging, machine translation, virtual assistants, etc.).

Examples

Let’s dive into some examples.

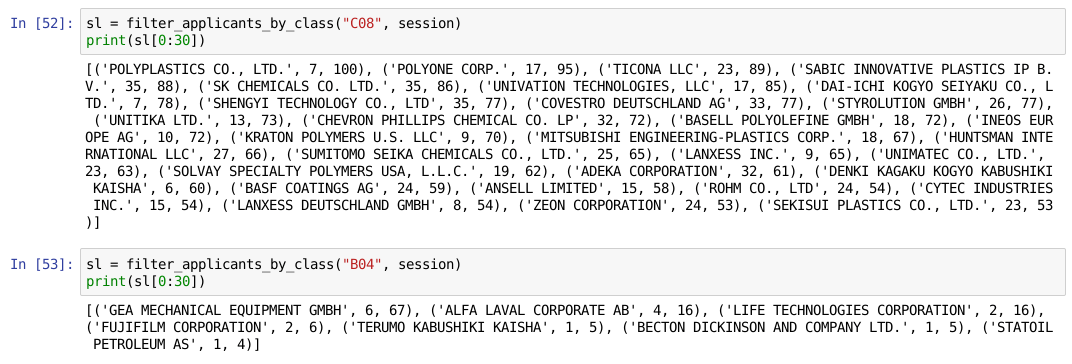

Search

At the moment, patent drafting typically starts as follows: receive invention disclosure, commission search (in-house or external), receive search results, review by attorney, commission patent draft. This can take weeks.

Instead, imagine a world where your inventors submit an invention disclosure and within minutes or hours you receive a report that tells you the most relevant existing patent publication, highlights potentially novel and inventive features and tells you whether you should proceed with drafting or not.

The techniques already exist to do this. You can download all US patent publications onto a hard disk that costs $75. You can convert high-dimensionality documents into lower-dimensionality real vectors (see https://radimrehurek.com/gensim/wiki.html or https://explosion.ai/blog/deep-learning-formula-nlp). You can then compute distance metrics between the vector for your invention disclosure and the vectors for the corpus of US patent publications, and rank the results. You can use a Long Short-Term Memory (LSTM) decoder (see https://www.tensorflow.org/tutorials/seq2seq) on any difference vector to indicate novel and possibly inventive features. A neural network classifier trained on previous drafting decisions can provide a probability of proceeding based on the difference results.
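To make the ranking step concrete, here is a minimal sketch in plain Python. It is not the pipeline described above: it swaps the learned low-dimensional vectors for simple TF-IDF word weights, and swaps the LSTM decoder for a crude list of terms that appear in the disclosure but not in the closest document. The three-document corpus, the disclosure text, the stop-word list and all function names are made up for illustration.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption, not a standard list).
STOPWORDS = {"a", "an", "and", "of", "on", "that", "the", "to", "with"}


def tokenize(text):
    """Lowercase the text, keep alphabetic tokens, drop stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def tfidf_vectors(documents):
    """Return one sparse TF-IDF vector (dict of term -> weight) per document."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            # Smoothed inverse document frequency keeps every weight positive.
            term: count * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1.0)
            for term, count in counts.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_prior_art(disclosure, corpus):
    """Return (corpus index, similarity) pairs, most similar first."""
    vectors = tfidf_vectors([disclosure] + list(corpus))
    query, docs = vectors[0], vectors[1:]
    scores = [(i, cosine(query, doc)) for i, doc in enumerate(docs)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


def distinguishing_terms(disclosure, closest_doc, top_n=5):
    """Terms used in the disclosure but absent from the closest document."""
    counts = Counter(tokenize(disclosure))
    seen = set(tokenize(closest_doc))
    novel = [(term, n) for term, n in counts.items() if term not in seen]
    novel.sort(key=lambda pair: (-pair[1], pair[0]))
    return [term for term, _ in novel[:top_n]]


# Hypothetical toy data standing in for a patent corpus and a disclosure.
corpus = [
    "A bicycle brake with a cable operated caliper acting on the wheel rim.",
    "A method of encrypting network packets using a shared secret key.",
    "A drone with four rotors and a camera mounted on a stabilising gimbal.",
]
disclosure = "A drone with four rotors that uses a thermal camera to detect crop disease."

ranking = rank_prior_art(disclosure, corpus)
closest = corpus[ranking[0][0]]
candidate_features = distinguishing_terms(disclosure, closest)
```

On a real corpus you would likely replace the word-count vectors with dense document embeddings and an approximate nearest-neighbour index, but the shape of the computation (vectorise, measure distance, rank, inspect the difference) stays the same.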

Drafting

A draft patent application in a complicated field such as computing or electronics may take a qualified patent attorney 20 hours to complete (including iterations with inventors). This process can take 4-6 weeks.

Now imagine a world where you can generate draft independent claims from your invention disclosure and cited prior art at the click of a button. This is not pie-in-the-sky science fiction. State-of-the-art systems that combine natural language processing, reinforcement learning and deep learning can already generate fairly fluent document summaries (see https://metamind.io/research/your-tldr-by-an-ai-a-deep-reinforced-model-for-abstractive-summarization). Seeding a summary based on located prior art, and the difference vector discussed above, would generate a short set of text with similar language to that art. Even if the process wasn’t able to generate a perfect claim off the bat, it could provide a rough first draft to an attorney who could quickly iterate a much improved version. The system could learn from this iteration (https://deepmind.com/blog/learning-through-human-feedback/), allowing it to improve over time.

Or another option: how about your patent figures are generated automatically based on your patent claims and then your detailed description is generated automatically based on your figures and the invention disclosure? Prototype systems already exist that perform both tasks (see https://arxiv.org/pdf/1605.05396.pdf and http://cs.stanford.edu/people/karpathy/deepimagesent/).

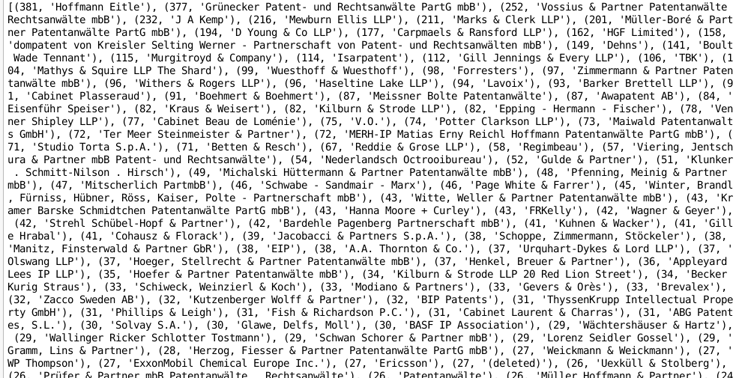

Prosecution

In the old days, patent prosecution involved receiving a letter from the patent office and a bundle of printed citations. These would be processed, stamped, filed, carried around on an internal mail wagon and placed on a desk. More letters would be written culminating in, say, a written response and a set of amendments.

From this, imagine that your patent office post is received electronically, then automatically filed and docketed. Citations are also automatically retrieved and filed. Objection categories are extracted automatically from the text of the office action and the office action is categorised with a percentage indicating the chance of obtaining a granted patent. Additionally, the text of the citations is read and a score is generated indicating whether the citations remove novelty from your current claims (this is similar to the search process described above, only this time you know what documents you are comparing). If the score is lower than a given threshold, a set of amendment options is presented, each with a percentage chance of success. You select an option, maybe iterate the amendment, and then the system generates your response letter. This includes inserting details of the office action you are replying to (specifically addressing each objection that is raised), generating passages that indicate basis in the text of your application, explaining the novel features, generating a problem-solution argument that has a basis in the text of your application, and providing pointers as to why the novel features are not obvious. Again you iterate, then file online.

Parts of this are already in place at major law firms (e.g. electronic filing and docketing). I have played with systems that can extract the text from an office action PDF and automatically retrieve and file documents via our document management application programming interface. With a set of labelled training data, it is easy to build an objection classification system that takes as input a simple bag of words. Companies such as Lex Machina (see https://lexmachina.com/) already crunch legal data to provide chances of litigation success; parsing legal data from, say, the USPTO and EPO would enable you to build a classification system that maps the full text of your application, and bibliographic data, to a chance of prosecution success based on historic trends (e.g. in your field since the 1970s). Vector-space representations of documents allow distance measures in n-dimensional space to be calculated, and decoder systems can translate these into the language of your specification. The lecture here explains how to create a question answering system using natural language processing and deep learning (http://media.podcasts.ox.ac.uk/comlab/deep_learning_NLP/2017-01_deep_NLP_11_question_answering.mp4). You could adapt this to generate technical problems based on document text, where the answer is bound to the vector-space distance metric. Indeed, patent claim space is relatively restricted: a claim is, at heart, a long sentence, and amendments are often additional sub-phrases of that sentence that are consistent with the language of the claimset. The nature of patent prosecution, and of the rules on added subject matter, naturally produces a closed-form style problem.
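As a rough illustration of the bag-of-words objection classifier mentioned above, here is a small multinomial naive Bayes sketch in plain Python. Naive Bayes is my choice for the example, not a description of any system in use; the six training sentences, the three labels and the class name are all hypothetical, and a real system would need thousands of labelled office action passages.

```python
import math
import re
from collections import Counter, defaultdict


def bag_of_words(text):
    """Count lowercase alphabetic tokens, ignoring word order."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


class NaiveBayesObjectionClassifier:
    """Multinomial naive Bayes with add-one smoothing (illustrative only)."""

    def __init__(self):
        self.label_counts = Counter()            # documents seen per label
        self.word_counts = defaultdict(Counter)  # word counts per label
        self.vocabulary = set()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            bag = bag_of_words(text)
            self.label_counts[label] += 1
            self.word_counts[label].update(bag)
            self.vocabulary.update(bag)
        return self

    def predict(self, text):
        bag = bag_of_words(text)
        total_docs = sum(self.label_counts.values())
        vocab_size = len(self.vocabulary)
        best_label, best_score = None, -math.inf
        for label, n_docs in self.label_counts.items():
            total_words = sum(self.word_counts[label].values())
            score = math.log(n_docs / total_docs)  # log prior
            for word, count in bag.items():
                if word not in self.vocabulary:
                    continue  # words never seen in training carry no signal
                likelihood = (self.word_counts[label][word] + 1) / (total_words + vocab_size)
                score += count * math.log(likelihood)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labelled passages of the kind found in office actions.
training_texts = [
    "Claim 1 lacks novelty over document D1 which discloses all features.",
    "The subject matter of claim 3 is not new in view of D2.",
    "Claim 1 does not involve an inventive step starting from D1 as closest prior art.",
    "The skilled person would arrive at claim 2 without inventive skill; it is obvious.",
    "Claim 4 is unclear because the term substantially is vague.",
    "The claims lack clarity as the feature is not defined.",
]
training_labels = [
    "novelty", "novelty",
    "inventive_step", "inventive_step",
    "clarity", "clarity",
]

classifier = NaiveBayesObjectionClassifier().fit(training_texts, training_labels)
clarity_guess = classifier.predict("The term approximately renders claim 5 unclear and vague.")
novelty_guess = classifier.predict("Document D2 discloses every feature of claim 1, so it is not new.")
```

The same interface would carry over to a stronger model: only `fit` and `predict` need to change if you later move from word counts to learned document vectors.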

Imagining Reality is the First Stage to Getting There

There is no doubt that some of these scenarios will be devilishly hard to implement. It took nearly two decades to go from paper to properly online filing systems. However, prototypes of some of these solutions could be hacked up in a few months using existing technology. The low-hanging fruit alone offers the potential to shave hundreds of thousands of dollars from patent prosecution budgets.

I also hope that others are aiming to get there too. If you are, please get in touch!