Large Language Models (LLMs) and other Machine Learning (ML) approaches have made huge progress over the last 5-6 years. They are leading to existential questioning in professions that pride themselves on a mastery of language. This includes the field of patent law.

When new technologies arrive, they also allow us a different perspective. Let’s look.

- What is a patent?

- How do computers “see” claims?

- Comparing claims using computers

- Settlers in a Brave New World

What is a patent?

At its heart, a patent is a description of a thing or a process.

It is made up primarily of two portions:

- claims – these define the scope of legal protection.

- description and figures – these provide the detailed background that supports and explains the features of the claims.

Claims

These are a set of numbered paragraphs. Each claim is a single sentence. A claimset is typically arranged in a hierarchy:

- independent claims

- These claims stand alone and do not refer to other claims.

- They represent the broadest scope of protection.

- They come in different types representing different types of protection. These relate to different infringing acts.

- dependent claims

- These claims refer to one or more other claims.

- They ultimately depend on one of the independent claims.

- They offer additional limitations that act as fallback positions – if an independent claim is found to lack novelty or an inventive step, a combination of that same independent claim and one or more dependent claims may be found to provide novelty and an inventive step.

Why claims?

An independent claim seeks to provide a specification of a thing or a process so that a legal authority can decide whether an act infringes upon the claim. This typically means that another thing or process is deemed to fall within the specification of the thing or process in the claim.

Patents arose from legal decrees on monopolies. They started to become a legal concept in the 15th and 16th centuries. At first, the legal authority was a monarch or guild. So you can think of them as an attempt 500-odd years ago to describe a thing or process for some form of human negotiation.

A key point is that claims are inherently linguistic. The specification of a thing or a process is provided in a written form, in whatever language is used by the patent jurisdiction in question. So we are using words to specify a thing or a process in a way that allows for comparison with other things or processes.

Normally we want the specification to be as broad as possible – to cover as many different things or processes as possible so as to maximise a monopoly. But there is a tension with the requirements that a claim be novel and inventive (non-obvious). There is a dialectic process (examination) that refines the language. I want a monopoly for “a thing” (“1. A thing.”) but there are pre-existing “things” that are a problem for novelty.

So claims are not only compared with other things and processes when determining infringement; they are also compared with things and processes that were somehow available to the public prior to the filing of a patent application containing the claims.

Description and Figures

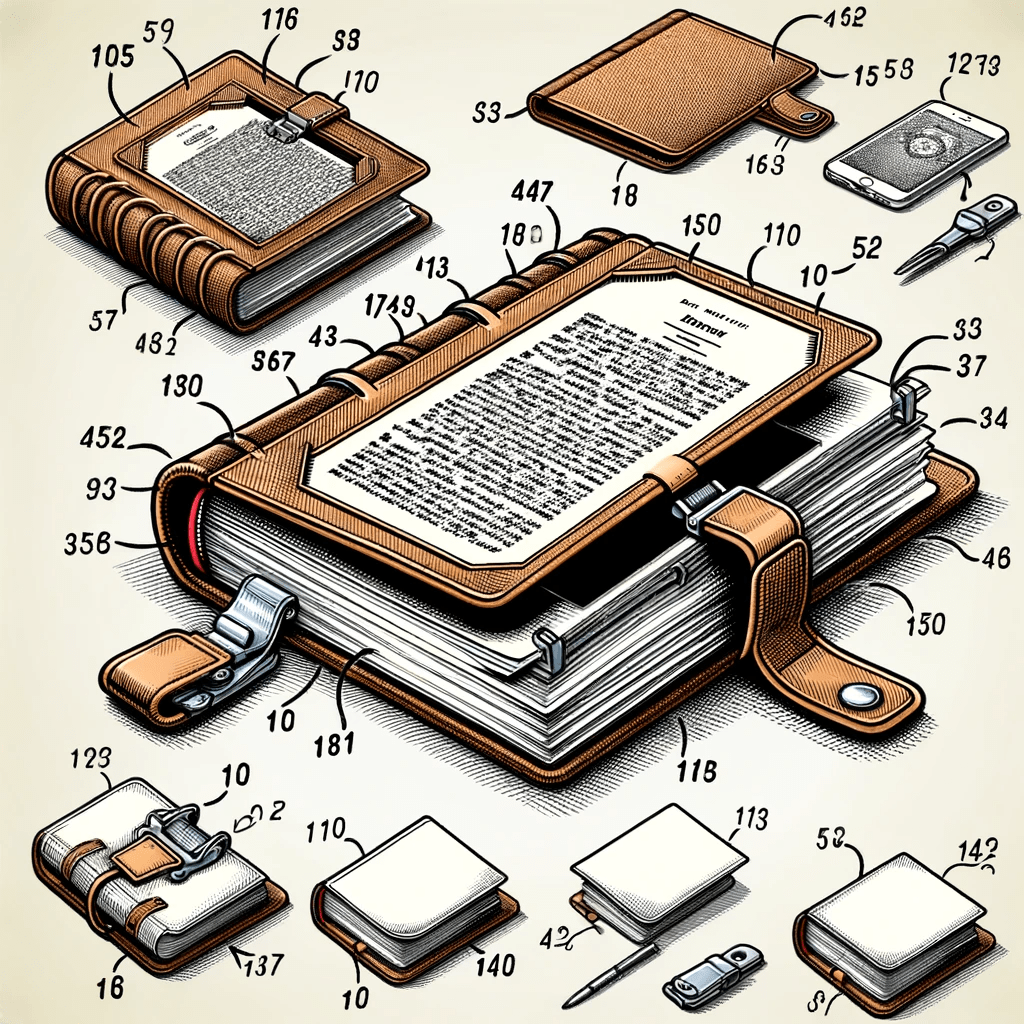

In a patent application there is also a written description and normally one or more figures. These are “extras” that help with understanding and build up a context for any comparison of the claims.

If we are examining claims for novelty and inventive step, we are often comparing them with the description and figures of existing patent publications. This is because claims are typically more abstract than the written description, and the written description contains a lot more information. We are using the principle that the specific anticipates the general.

Figures are traditionally line diagrams. They started as engineering drawings and have since extended to more abstract diagrams, like flowcharts for processes and system diagrams for complicated information technology equipment.

How do computers “see” claims?

A Very Brief History of Patent Information

If we want to help ourselves compare claims, either for infringement or examination, it would be good to automate some of the process. Computers are a good tool for this job.

Patent applications used to be handwritten (as were all documents). If copies were to be made, these would also be handwritten.

Later, they were printed using mechanical printing presses. The process for this used to be the arrangement of the letters and characters in a frame to form pages of text, which were then inked and pressed onto paper. Illustrations were typically originally hand-drawn, and then reproduced using etchings or lithography.

As typewriters became common in the 20th century, patent specifications were typed from handwritten versions or as a patent attorney dictated. When I started in the profession in 2005, there were still “secretaries” that typed up letters and patent specifications.

Computers came rather late to the patent profession. It was only in the 1990s they started entering into the office and it was only in the 21st century that word processors finally replaced physical type and paper.

We still refer to “patent publications” and there is a well-trodden legal process for publication. This was because it used to take a lot of work to publish a patent specification. This seems strange in an age when anyone can publish anything in seconds at the click of a button.

From Physical to Digital

Computers are actually closer to their analogue cousins than we normally realise.

At a basic level, a text document of a set of patent claims comprises a sequence of character encodings. Each character encoding is a sequence of bits (values of 0 and 1). A character is selected from a set that includes lower case letters, upper case letters, and numbers. Normally there is a big dictionary of numbers associated with each character. You can think of a character as anything that is either printed or controls the printing. In the past, characters would be printed by selecting a particular block with a carving or engraving of the two-dimensional visual pattern that represents the character. If you imagine a box of blocks, where each block is numbered, that’s pretty much how character encoding works in a computer.

For example, the patent claim – “1. A thing.” is 049 046 032 065 032 116 104 105 110 103 046 in a decimal representation of the ASCII encoding. This can then be converted into its binary equivalent, e.g. 00110001 00101110 00100000 01000001 00100000 01110100 01101000 01101001 01101110 01100111 00101110. In an actual character sequence, there is typically no delimiting character (“space” is still just a character), so what you have is 0011000100101110001000000100000100100000011101000110100001101001011011100110011100101110. Which bits relate to which character is determined based on fixed-length partitioning.
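The encoding above can be reproduced in a few lines. This is a minimal sketch using Python's built-in string encoding; the variable names are illustrative only.

```python
claim = "1. A thing."

# Encode the string as ASCII: one byte (8 bits) per character.
encoded = claim.encode("ascii")

decimal_codes = [f"{byte:03d}" for byte in encoded]
binary_codes = [f"{byte:08b}" for byte in encoded]

# The stored form has no delimiters between characters.
bitstream = "".join(binary_codes)

print(" ".join(decimal_codes))  # 049 046 032 065 032 116 104 105 110 103 046
print(" ".join(binary_codes))
print(bitstream)

# Recover the characters by fixed-length (8-bit) partitioning of the bit stream.
chunks = [bitstream[i:i + 8] for i in range(0, len(bitstream), 8)]
decoded = "".join(chr(int(chunk, 2)) for chunk in chunks)
print(decoded)  # 1. A thing.
```

Note that nothing in `bitstream` marks where a "word" starts or ends; the space is just another 8-bit pattern.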

Another hangover from mechanical printing and typing is that many of the control and spacing characters are digital versions of old mechanical commands. For example, “carriage return” doesn’t really make sense inside a computer, as a computer doesn’t have a carriage. However, a typewriter has a carriage that pings forwards and backwards. Similarly, the “tab” character is a short cut for those on typewriters having to type tables. Any actual text thus contains not only the references to the letters used to form the words, but also the control characters that dictate the whitespace and file structure.

A sequence of character encodings is typically referred to as a “string” (from the mental image of beads on a string). This may be stored or transmitted. Word processors store character encodings in a more complex digital wrapper. Microsoft Word rather silently shifted well over a decade ago from a proprietary wrapper to a more open Extensible Markup Language (XML) format (which is why you have all those different options for saving Office files). A modern Word file is actually a zip file of XML files.
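To make the "zip file of XML files" point concrete, here is a rough sketch that builds a tiny in-memory archive mimicking the layout of a Word file and then pulls the text back out. The XML is a stripped-down stand-in, not a complete or valid .docx; a real file contains several more parts. The `word/document.xml` path and the `w:t` text elements follow the WordprocessingML convention.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# Namespace used by WordprocessingML elements such as <w:t> (text run content).
W_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"

# Stand-in for word/document.xml (illustrative only, not a full document).
document_xml = (
    f'<w:document xmlns:w="{W_NS}"><w:body><w:p>'
    "<w:r><w:t>1. A thing.</w:t></w:r>"
    "</w:p></w:body></w:document>"
)

# Write the XML into an in-memory zip archive, as a word processor would on save.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("word/document.xml", document_xml)

# Read it back: list the archive members and parse the XML part.
with zipfile.ZipFile(buffer) as archive:
    names = archive.namelist()
    root = ET.fromstring(archive.read("word/document.xml"))

# The claim text is buried inside <w:t> elements within the XML wrapper.
text_runs = [node.text for node in root.iter(f"{{{W_NS}}}t")]
print(names)      # ['word/document.xml']
print(text_runs)  # ['1. A thing.']
```

The same two-step approach (open as zip, parse the XML part) should work on a real .docx saved from Word, although the text there is usually split across many runs.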

Things get more confusing when we consider the digital replacement for physical prints – PDF files. PDF files are different beasts from word processing files. They are concerned with defining the layout of elements within a displayed document. While both word processing documents and PDF files store strings of text somewhere underneath the wrapping, the wrapping is quite different.

What does this all mean for my claims?

It means that much of the linguistic structure we perceive in a written patent claim exists in our heads rather than in the digital medium.

The digital medium just stores sequences of character encodings. A digital representation of a patent claim does not even contain a machine representation of “words”.

This still confuses many people. They assume that “words” and even sometimes the semantic meaning exist “somewhere” in the computer. They assume that the computer has a concept of “words” and so can compute with “words”. This was false…until a few years ago.

Comparing claims using computers

Traditional Patent Searching

Patent searching can be thought of as a way of comparing a patent claim with a body of prior publication documents. You can see the limitations of traditional computer representations of text when you consider patent searching.

Most digital patent searching, at least that developed prior to 2020ish, is based on key word matching. This works because it does not need the computer to understand language. All it consists of is character sequence matching.

For example, if you are looking for a “thing”, you type in “thing”. This gets converted into a binary sequence of bits. The computer then searches through portions of encoded text looking for a matching binary sequence of bits. It’s a simple seek exercise. It’s also slow and fragile – “entity” or “widget” can pretty much have the same meaning but will not be located.
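A minimal sketch of this kind of matching is below. The three "documents" are invented for illustration; the search is a plain byte-sequence scan, which is roughly what naive keyword matching amounts to.

```python
# Invented mini-corpus for illustration only.
documents = {
    "doc_a": "A thing comprising a handle.",
    "doc_b": "An entity comprising a handle.",
    "doc_c": "A widget with a handle.",
}

# The query is just a sequence of bytes.
query = "thing".encode("utf-8")

# Scan every document for an identical byte sequence.
hits = [name for name, text in documents.items() if query in text.encode("utf-8")]
print(hits)  # ['doc_a']

# Even a change of case breaks the match: "Thing" is a different byte sequence.
case_hit = "Thing".encode("utf-8") in documents["doc_a"].encode("utf-8")
print(case_hit)  # False
```

The documents that talk about an "entity" or a "widget" are never found, even though a human reader might treat them as describing the same thing.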

Now there are some tricks to speed up keyword matching on large documents. You can do a simple form of tokenisation by splitting character sequences on whitespace and punctuation characters (e.g., a defined list of character encodings that define spaces, full stops, or line returns). These represent words 80-90% of the time but there are lots of issues (compare splitting on “ ” and “.” for “A thing.” and “This is 3.3 ml.”). The resulting character sequences following the split can then be counted. This is called “indexing” the text. This then has the power of reducing the text to a “bag of words” – the “index”. It turns out that lots of words are repeatedly used (e.g., “it”, “the”, “a” etc.). The bag of words, represented as a set of unique character sequences, thus has far fewer entries than the complete text. You can also ignore words that don’t help (called “stopwords”, they are normally chosen to exclude “a”, “the”, “there” and other high-frequency words). The “index” can thus be much more quickly searched for character sequence matches. (This ignores most of the very clever real world optimisations for keyword searching in large databases but is roughly still how things work so stay with me.)
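The splitting, counting and stopword steps can be sketched as follows. The stopword list and the sample text are assumptions made for the example, and real search engines use far more careful tokenisers.

```python
import re
from collections import Counter

# A tiny, hand-picked stopword list (illustrative only).
STOPWORDS = {"a", "the", "there", "it", "is"}

def naive_tokens(text):
    """Split on spaces, full stops and line breaks, dropping empty pieces."""
    return [piece for piece in re.split(r"[ .\n]+", text.lower()) if piece]

def build_index(text):
    """Count each remaining character sequence: a simple 'bag of words'."""
    return Counter(tok for tok in naive_tokens(text) if tok not in STOPWORDS)

print(naive_tokens("A thing."))         # ['a', 'thing']
print(naive_tokens("This is 3.3 ml."))  # ['this', 'is', '3', '3', 'ml']

text = "A thing comprising a handle. The handle is attached to the thing."
index = build_index(text)
print(index["thing"], index["handle"])  # 2 2
print(len(index))                       # 5
```

The second example shows the problem with splitting on full stops: the quantity "3.3" is broken into two separate "3" tokens.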

Now, key word searching is only a rough proxy for a claim comparison.

If you try to search the complete character sequence of the claim against all the patents ever published, it is very likely you will not find a match. This is because the longer the sequence of characters, the more unique it is. You would only find a match in Borges’s library. The Google PageRank claim is around 600 characters. You would need to find a string with 600 characters arranged identically. And you would not match against semantically identical descriptions in prior publications that just used a different punctuation character encoding somewhere amongst those 600 characters (don’t get me started on hyphens).
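The hyphen problem is easy to demonstrate. In this sketch the two strings look identical on screen but use different Unicode characters for the hyphen; the list of dash characters to normalise is my own choice and is not exhaustive.

```python
a = "a computer-implemented method"       # U+002D hyphen-minus (the keyboard hyphen)
b = "a computer\u2010implemented method"  # U+2010 hyphen (common in typeset text)

print(a == b)  # False: the underlying character codes differ

# One workaround: map a hand-picked set of dash-like characters to U+002D
# before comparing. (Assumed list: hyphen, non-breaking hyphen, figure dash,
# en dash, em dash, minus sign.)
DASHES = "\u2010\u2011\u2012\u2013\u2014\u2212"
DASH_TABLE = str.maketrans({dash: "-" for dash in DASHES})

def normalise_dashes(text):
    """Replace dash-like characters with the plain hyphen-minus."""
    return text.translate(DASH_TABLE)

print(normalise_dashes(a) == normalise_dashes(b))  # True
```

Normalisation of this kind has to be applied to both the query and the searched text, and every extra rule is another place where the two can drift apart.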

Multiple term key word searching typically involves picking multiple key words from the claim we wish to compare and doing a big AND query, looking for all those words to have matches with a body of text. Even more complex approaches such as “within 5” typically just perform a normal character match then look for another match in a subset of character encodings either side of the character match.
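Both query styles can be sketched in a few lines. This version works on word tokens rather than raw character offsets, which is a simplifying assumption; the function names and the sample text are made up for the example.

```python
import re

def tokens(text):
    """Lower-case the text and keep runs of letters and digits."""
    return re.findall(r"[a-z0-9]+", text.lower())

def and_query(text, keywords):
    """True only if every keyword appears somewhere in the text."""
    present = set(tokens(text))
    return all(keyword in present for keyword in keywords)

def within(text, first, second, window=5):
    """True if `first` and `second` occur no more than `window` tokens apart."""
    toks = tokens(text)
    first_positions = [i for i, tok in enumerate(toks) if tok == first]
    second_positions = [i for i, tok in enumerate(toks) if tok == second]
    return any(abs(i - j) <= window
               for i in first_positions for j in second_positions)

text = ("A cover attached to the binding, "
        "wherein the cover is detachable from the binding.")

print(and_query(text, ["cover", "binding", "detachable"]))  # True
print(and_query(text, ["cover", "wheel"]))                  # False
print(within(text, "cover", "detachable", window=5))        # True
print(within(text, "attached", "detachable", window=5))     # False
```

Neither function knows anything about meaning: both reduce to exact matches on character sequences plus some position arithmetic.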

How Patent Attorneys Compare Claims

Patent attorneys learn their skill through repeatedly working with patents over many years, typically decades. It’s a rather unique and niche skill. But often it’s one that cannot be easily explained or formalised.

That’s why it’s always a useful exercise to imagine explaining what you do to a lay person. Your gran or a five-year old.

When I was first training as a patent attorney, coming from a science and engineering background, I did think there was a “right” way of comparing patent claims and that it was just a matter of learning this and applying it. For the law, you quickly realise that this isn’t how things work. Training typically consisted of working with a skilled qualified attorney and watching how they did things. And then seeking to rationalise those things into a general scheme. It’s much more of a dark art. After working with many different attorneys, you realise there is lots of stylistic variation. You realise the courts often have an intuitive feel for what is right, and this is used to guide a rationalised logic process within the bounds of previous attempts. The rationalised logic is what you end up with (the court report), while the intuitive feeling often hides in plain sight.

Anyway, claim comparison is typically split into the following process:

- Construe the claim

- Split the claim into features

- Match features

- Look at the number of matched features

If all the features match in a way that is agreed by everyone then the claim covers an infringement or the claim is anticipated by prior art.

Construe the claim

“Construing” a claim is shorthand for interpreting the terms within the claim. Typically, it concentrates on areas of the claim that may be unclear, or are open to different interpretations. For example, a claim could have a typo or error, or a term might have multiple meanings that cover different groups of things.

Construing the claim is typically performed early on as it allows multiple parties to have a consistent interpretation of the text. It is thus needed before any matching is performed. It is often presented as an exercise that is “independent” of the later stages of the comparison. However, in practice, construction is performed with an eye on the comparison – if the infringement or prior art revolves around whether a particular feature is present (e.g., does it have a “wheel”?) then the terms that describe that feature have greater weight when construing (e.g., what is a “wheel”?).

Claim construction is something that is hard to translate to an automated analysis. It involves having parties to a disagreement agree on a set of premises or context. It thus naturally involves mental models within the minds of multiple groups of people, people that have a vested interest in an interpretation one way or another.

Where there is disagreement, the description and figures are typically used as an information source for resolving error and ambiguity. For example, if the description and figures clearly state that “tracked propulsion” is “not a wheel”, then it would be hard for a party to argue that “wheel” covers “tracked propulsion”. Similarly, if the claim refers to a “winjet” and the description consistently describes a “widget”, then it seems clear “winjet” is a typo and what was meant was “widget”.

Claim construction can also be seen as making the implicit, explicit. Certain terms in a claim may be deemed to have a minimum number of properties or attributes. These may be based on the “common general knowledge” as represented by sources such as textbooks or dictionaries. These can be taken as a “base line” that is then modified by any explicit specification in the claim or description and figures. Again, if the parties agree that both objects of comparison have an X, there is little reason to go into this level of detail. It is mainly terms about which the comparison turns that undergo this analysis. These terms are typically those where there is the greatest difference between the parties and the strongest arguments. One of the roles of the courts or the patent examination bodies is to shape the argument so that points of agreement can quickly be admitted, and the differing points number a reasonable amount. (If there are lots of differences, and many of these, on the face of it, are supported, it is difficult to bring a case or find agreement within the authority; if there are no differences that are contested, the case is typically easy to bring to summary judgement.)

When construing the claim, prior decisions of the courts can also be brought to bear. If a higher court rules that using the phrase “X” has a particular interpretation, this can be applied in the present case.

Split the Claim into Features

What are claim “features”?

Here we can go back to our original split between “things” and “processes”.

“Things” are deemed to have a static instantiation (whether physical or digital). Things are deemed to be composed of other things: systems have different system components, physical mechanical devices have different parts, and chemical compositions have different molecular and/or atomic constituents.

“Processes” are a set of events that unfold in time, typically sequentially. They are often methods that involve a set of steps. Each step may be seen as a different action and/or configured state of things and matter.

When we are looking at claim “features”, we are looking to segment the text of the claim into sub-portions that we can consider individually. Psychologically, we are looking to “chunk” the content of the claim. We chunk because our working memories are limited. When comparing we need to hold one chunk in the working memory, and compare it with one or more other chunks. Our brains can hold a sequence of about three or four “chunks” in working memory at any one time, or hold two items for comparison. We decompose the claim into features as a way to work out if a match exists – we can say the whole matches if each of the parts matches.

Now, we only need to break a claim into features because it is complex. If the claim was “1. A bicycle.”, we could likely hold the whole claim in our working memories and compare it with other entities. In this case, we might need to use the previous step of claim construction to determine what the minimum properties of a “bicycle” were. (Two wheels? Is a tricycle, a motorcycle, or a unicycle a “bicycle”?). Here we see that the definition of claim features can be a recursive process, where the depth of recursion into both explicit and implicit features depends on the level of disagreement between parties (and likelihood of collective agreement between different parties within an authority, such as between a primary examiner and senior examiner). Recursion can also be used to “zoom in” on a particular feature comparison, while then concluding on a match at a “zoomed out” level of the feature (e.g., this does match a bicycle because X is a first wheel and Y is a second wheel).

A Short Aside on Segmentation

Claim feature extraction is a form of semantic segmentation.

Segmentation in images made a huge leap in 2023 with the launch of Meta’s Segment Anything model. In images, segmentation is often an act of determining a context-dependent pixel boundary in two-dimensions.

For the sequence of characters that form a patent claim, we have a one-dimensional problem. We need to determine the “feature” breakpoints in the sequence of characters.

It turns out patent attorneys provide clues as to this semantic segmentation via the use of whitespace. Patent attorneys will often add whitespace such that the claim is partitioned into pseudo-features by way of the two-dimensional layout.

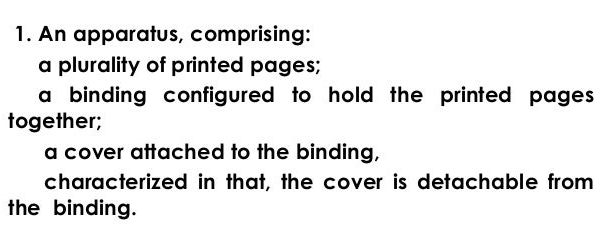

In the example above we see that commas, semi-colons, and new lines break the patent claim into five natural “features”.

It turns out there are a number of problems with the reliability of automated segmentation based on whitespace:

- The text is often transformed when it is loaded into different systems, meaning that original white space may be lost or omitted. Fairly often new lines are stripped out, or stripped out then manually replaced.

- There are many different encodings of many different forms of whitespace – there are multiple versions of the new line character for example.

- Real-world patent claims often have a multi-tier nested structure that requires more advanced recursive segmentation.
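The first two problems in the list above can be seen in a short sketch. The claim text is reconstructed from the feature list used in this post, and the mix of line-break characters is contrived to show how fragile a naive split can be.

```python
# The same claim, but with four different line-break encodings mixed together:
# Windows (\r\n), Unix (\n), old Mac (\r) and the Unicode line separator (\u2028).
claim = (
    "1. An apparatus, comprising:\r\n"
    "a plurality of printed pages;\n"
    "a binding configured to hold the printed pages together;\r"
    "a cover attached to the binding,\u2028"
    "characterized in that, the cover is detachable from the binding."
)

# Splitting only on "\n" misses two of the four breaks.
naive = claim.split("\n")
print(len(naive))  # 3

# str.splitlines() recognises a wider set of line boundaries.
features = [line.strip() for line in claim.splitlines() if line.strip()]
print(len(features))  # 5
for feature in features:
    print("-", feature)

# Once a system flattens the text, the whitespace clues are gone for good.
flattened = " ".join(features)
print(len(flattened.splitlines()))  # 1
```

This handles flat claims only; the nested structure mentioned in the last bullet would need indentation or numbering cues that are often lost along with the line breaks.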

Things or Events as Features

Those familiar with patent law will realise that when someone refers to “claim features”, they are normally referring to portions of text within the claim that are indicated as separate sections by the author’s use of whitespace. Claim charts are tables that often have 5-10 rows, where each row is a feature that is a different portion of the claim text determined in this way. Claim charts normally are structured to fill up one page of A4, so we can easily get an idea of the feature matches.

However, we can ask a deeper question – what are those different whitespace-separated portions of the claim actually representing?

Or put another way – what do we mean by semantic segmentation of the claim text?

Let’s have a look at the simple WIPO claim example above. Using new lines we can split that into the following features:

- [a]n apparatus (, comprising:)

- a plurality of printed pages;

- a binding configured to hold the printed pages together;

- a cover attached to the binding,

- characterized in that, the cover is detachable from the binding.

Looking closely, we see that actually those text portions are centred on different things. The claim defines an “apparatus“, that forms the top line. This apparatus has a number of components: pages, a binding, and a cover. We see that the middle three segments are based around definitions of each of these components. The last section then defines a characteristic of the apparatus in terms of the cover and binding components.

So for a “thing” claim, we see that our semantic focus for segmentation is “sub-things”. “Things” are made of interconnected “sub-things” and this pattern repeats recursively. We can look at different “things” or “sub-things” in isolation from their connections to focus on their individual properties. Things at each level are defined by the interconnection and inter-configuration of sub-things at a lower level.

Now in English grammar, we have a term for “things”: nouns. Nouns and noun-phrases are the terms we use to classify the location of “things” in text. So when we semantically segment a claim, we are doing this based on the noun content of the claim.

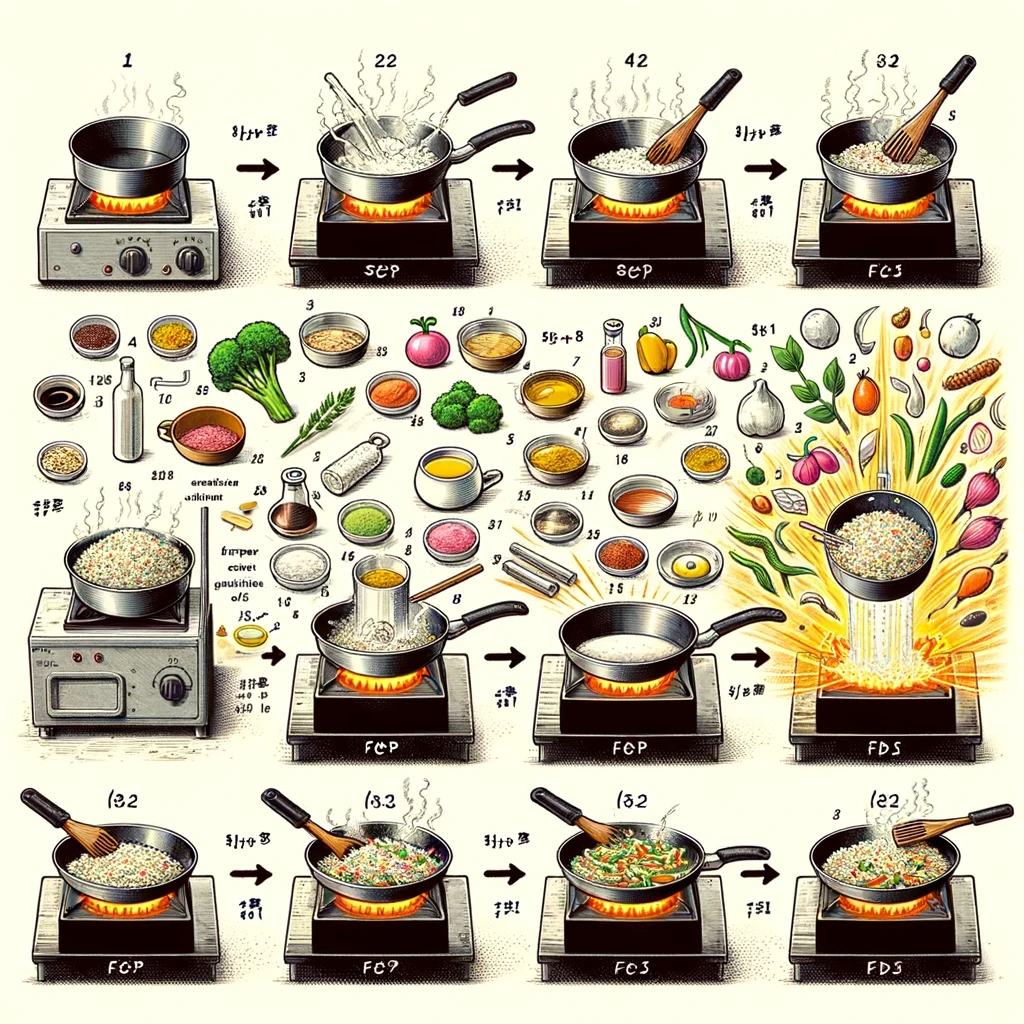

Method claims are slightly different. We no longer have a subdivision by static structural “things”. Rather we have a partition by time, or more precisely different sequences of actions within time. Take another claim example from WIPO:

If you were to ask a patent attorney to split that claim into “features”, they would likely choose each step – i.e. each clause starting on a new line and ending with a semi-colon and new line:

- [a] process for producing fried rice (, comprising the steps of:)

- turning the heat source on;

- cooking rice in water over the heat source for a predetermined period;

- placing a predetermined amount of oil in a pan;

- cooking other ingredients and seasoning in the pan over the heat source;

- placing the cooked rice in the pan; (and)

- stirring consistently the rice and the other ingredients for a predetermined length of time over the heat source.

These steps are different actions in time, where time runs sequentially across the steps.

Now we can see that method claims also share certain aspects of the “thing” claims. We have several “things” that are acted on in the method, including: “fried rice”, “heat source”, “rice”, “oil”, “pan”, “ingredients”, “seasoning”, “cooked rice”, and “length of time”. We can also see that some of those “things” are actually different states of the same thing – we start with “rice”, which then becomes “cooked rice”, which is output by the method as “fried rice”.

Even though a method consisting of: “turning”, “cooking”, “placing”, “cooking”, “placing”, and “stirring” would be a valid patent claim, it would likely lack novelty. For example, the quite different method of cooking a chicken dinner below would fall within that method:

- turning a chicken breast in flour;

- cooking a set of potatoes in water;

- placing the chicken breast and cooked potatoes on baking trays;

- cooking the chicken breast and potatoes in the oven;

- placing the cooked chicken breast and potatoes on a plate; and

- stirring gravy to pour over the plate.

So we see that it is the things that are involved in each step that define the (sub) features of the step.
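The point can be checked mechanically. In this sketch each step is reduced to its first word as a crude stand-in for the action (an assumption that only works because every step here happens to start with its verb); the two recipes then become indistinguishable.

```python
fried_rice = [
    "turning the heat source on",
    "cooking rice in water over the heat source for a predetermined period",
    "placing a predetermined amount of oil in a pan",
    "cooking other ingredients and seasoning in the pan over the heat source",
    "placing the cooked rice in the pan",
    "stirring consistently the rice and the other ingredients for a "
    "predetermined length of time over the heat source",
]

chicken_dinner = [
    "turning a chicken breast in flour",
    "cooking a set of potatoes in water",
    "placing the chicken breast and cooked potatoes on baking trays",
    "cooking the chicken breast and potatoes in the oven",
    "placing the cooked chicken breast and potatoes on a plate",
    "stirring gravy to pour over the plate",
]

def leading_actions(steps):
    """Keep only the first word of each step (a rough proxy for the verb)."""
    return [step.split()[0] for step in steps]

print(leading_actions(fried_rice))
# ['turning', 'cooking', 'placing', 'cooking', 'placing', 'stirring']
print(leading_actions(fried_rice) == leading_actions(chicken_dinner))  # True
```

Stripped of its nouns, the fried rice method matches the chicken dinner exactly, which is why the "things" in each step carry the distinguishing weight.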

Match Features

Once we have identified features in the claim the next step is comparing each of those features. For infringement, we are comparing with a possibly infringing thing or process. For examination, we are comparing with a prior publication.

Splitting a claim into features lessens the cognitive load of the comparison. It also allows agreement on uncontentious aspects, focusing effort on key points of disagreement. Much of the time, there is only really one feature that may or may not differ. Often one missing feature is all you need to avoid infringement and/or argue for an inventive step.

Now, you might say that matching is easy, just like spot the difference.

Going back to an image analogy, visual features may be segmented portions of a two-dimensional extent. In spot the difference we compare two images that are scaled to the same dimensions. We are then looking for some form of visual equivalence in the pixel patterns in different portions of the image.

Words are harder though. We are dealing with at least one level of abstraction from physical reality. We are looking for a socially agreed correspondence between two sets of words.

The facts of the case determine what features will be in contention and which may be more easily matched. Different features will be relevant for different comparisons. Inventive step considerations still involve a feature matching exercise, but they involve different feature matches in different portions of prior art.

What does it mean for a feature to match?

We have our claim feature, which is set out in a portion of the claim text (our segmented portion).

Our first challenge is to identify what we are comparing with the claim. These can sometimes be fuzzy-edged items that need to be human-defined. Sometimes they are harder-edged and more unanimously agreed upon as “things” to compare. For infringement, the comparison may be based on a written description of a defined product, or a documented procedure. For examination, it is often a prior-published patent application.

Our second challenge is to find something in the comparison item that is relevant to the particular feature. There may be multiple candidates for a match. At an early stage this might be a general component of a product or thing, or a particular component of a particular embodiment of a patent application as set out in one or more figures.

Once we have something to compare, and have identified a rough candidate correspondence, the detailed analysis of the match then depends on whether we are looking at infringement or examination for novelty.

For infringement, we have a “match” if the language of the claim feature can be said to describe a corresponding feature in the potentially infringing product or process. At this stage we can ignore the nuances of the infringement type (e.g., use vs sale), as this normally only follows if we have a clear infringing product or process. To be more precise, we have a “match” if a legal authority agrees that the language of the claim feature covers a corresponding feature in the potentially infringing product or process. So there is also a social aspect.

For the examination of novelty, we have a “match” if a portion of a written description can be said to describe all the aspects of the claim feature. As claim features are typically at a higher level of abstraction, this can also be thought of as: would an abstracted version of the written description produce a summary that is identical to the claim feature?

A match is not necessarily boolean; if there is a particular point of interpretation or ambiguity there may be numerous options to decide. A decision is made based on reason (or reasons), sometimes with an appeal to previous cases (case law) or analogy or even public policy. If you asked 100 people, you might get X deciding one way and 100-X deciding the other.

Look at the number of matched features

This is normally the easy part. If we have iterated through our “matching” for each identified claim feature, and the set of claim features exhaustively cover all of the claim text, then we simply total up the number of deemed “matches”.

If all the features match, we have a matching product or process for infringement, or our claim is anticipated by the prior art.

If any feature does not match, then we do not have infringement (ignoring for now legal “in-filling” possibilities) and our claim has novelty, with the non-matching features being the “novel” features of the claim.

Any non-matching features may then be subject to a further analysis on the grounds of inventive step. If the non-matching feature is clearly found in another document, and a skilled person would seek out that other document and combine teachings with no effort, then the non-matching feature is said to lack an inventive step.
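The tallying step is simple enough to write down directly. The claim chart below uses the apparatus claim from earlier, and the True/False match decisions are invented for illustration; in practice each one is the contested, human part of the exercise.

```python
# Hypothetical claim chart: feature text -> whether a match was found
# in a single prior art document (decisions invented for this example).
claim_chart = {
    "an apparatus": True,
    "a plurality of printed pages": True,
    "a binding configured to hold the printed pages together": True,
    "a cover attached to the binding": True,
    "the cover is detachable from the binding": False,
}

matched = sum(claim_chart.values())
anticipated = all(claim_chart.values())
novel_features = [feature for feature, hit in claim_chart.items() if not hit]

print(f"{matched} of {len(claim_chart)} features matched")  # 4 of 5 features matched
print("anticipated:", anticipated)                          # anticipated: False
print("novel features:", novel_features)
```

Any feature left in `novel_features` is the starting point for the inventive step analysis.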

Can we automate?

Given the above, we can ask the valid question: can we automate the process?

The answer used to be “no”. The best we could do was to compare strings, and we’ve seen above that any difference in surface form of the string (including synonyms or differences in spelling or white space) would throw out an analysis of even single words.

Fuzzy matching

Before 2010, natural language processing (NLP) engineers tried tinkering with a number of approaches to match words. This normally fell within the area of “fuzzy matching”. An approach used since the 60s is calculating the Levenshtein distance, a measure of the minimum number of single-character edits that change one word into another. This could catch small typos but still needed a rough string match. It failed with synonyms and irregular verbs.
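For reference, a compact version of the Levenshtein distance is sketched below, using the standard row-by-row dynamic programming approach. The example word pairs are my own, apart from “winjet” and “widget”, which come from the construction example earlier.

```python
def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions or
    substitutions needed to turn string `a` into string `b`."""
    # previous[j] holds the distance between the processed prefix of `a`
    # and the first j characters of `b`.
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep if equal)
            ))
        previous = current
    return previous[-1]

print(levenshtein("widget", "winjet"))  # 2: a plausible typo
print(levenshtein("go", "went"))        # 4: an irregular verb shares no letters
print(levenshtein("thing", "entity"))   # large, despite the similar meaning
```

A small distance flags likely typos, but the measure says nothing about meaning: a synonym is just as "far away" as an unrelated word.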

word2vec

In the early 2010s though, things began to change. Techniques such as word2vec were developed. This allowed researchers to replace a string version of a word with a list of floating point numbers. These numbers represented latent properties of use of the string in a large corpus of documents. Or put another way, we could compare words using numbers.

Early word vector approaches offered the possibility of comparing words with the same meaning but different string patterns. In particular, we found that the vectors representing the words had some cool geometric properties – as we moved within the high-dimensional vector space we saw natural transitions of meaning. Words with similar meanings (i.e., that were used in similar ways in large corpora) had vectors that were nearby in vector space.

So words such as “server” and “computer” might be matched in a claim based on their word2vec vectors despite there being no string match. I remember playing with this using the gensim library.
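The comparison itself is usually a cosine similarity between vectors. The sketch below uses made-up four-dimensional vectors purely to show the arithmetic; real word2vec vectors are learned from a corpus and typically have a few hundred dimensions.

```python
import math

# Invented toy vectors for illustration only (not real word2vec output).
toy_vectors = {
    "server":   [0.9, 0.8, 0.1, 0.0],
    "computer": [0.8, 0.9, 0.2, 0.1],
    "bicycle":  [0.1, 0.0, 0.9, 0.8],
}

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

close = cosine_similarity(toy_vectors["server"], toy_vectors["computer"])
far = cosine_similarity(toy_vectors["server"], toy_vectors["bicycle"])
print(round(close, 2))  # 0.99
print(round(far, 2))    # 0.12
```

There is no string overlap between "server" and "computer", yet their vectors point in nearly the same direction, which is exactly what keyword matching could not capture.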

Transformers

We didn’t know it at the time, but word vectors were the beginning not the end of NLP magic.

In the early days, word embeddings allowed us to create numerical representations of word tokens for input into more complex neural network architectures. At first you could generate embeddings for a dictionary of 100,000 words, giving you a matrix of vector-size x 100k and you could then select your inputs based on a classic whitespace tokenisation of the text.
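As a rough sketch of that lookup, the code below pairs a five-word vocabulary with a small matrix of random numbers standing in for learned embeddings. The vocabulary, the `<unk>` fallback token and the vector size are all assumptions for the example, and the matrix is stored with one row per word (the transpose of the vector-size x 100k layout mentioned above).

```python
import random

random.seed(0)  # fixed seed so the stand-in "embeddings" are repeatable

# Tiny vocabulary; "<unk>" catches any token not in the dictionary.
vocabulary = ["<unk>", "a", "thing", "comprising", "wheel"]
token_to_row = {token: row for row, token in enumerate(vocabulary)}

EMBEDDING_SIZE = 4
# One row of random floats per vocabulary entry (placeholder for trained values).
embedding_matrix = [
    [random.uniform(-1, 1) for _ in range(EMBEDDING_SIZE)]
    for _ in vocabulary
]

def embed(text):
    """Whitespace-tokenise the text and look up one vector per token."""
    rows = [token_to_row.get(token, token_to_row["<unk>"])
            for token in text.lower().split()]
    return [embedding_matrix[row] for row in rows]

vectors = embed("A thing comprising a widget")
print(len(vectors))                       # 5 tokens in, 5 vectors out
print(vectors[0] == vectors[3])           # True: both are the row for "a"
print(vectors[4] == embedding_matrix[0])  # True: "widget" falls back to <unk>
```

The fallback shows a weakness of fixed dictionaries: any word outside the vocabulary collapses to the same vector.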

Quickly, people realised that actually you didn’t need the word2vec as a separate process, but you could learn that matrix of embeddings as part of your neural architecture. Sequence to sequence models were built on top of recurrent neural network architectures. Then in 2017, Attention is All You Need came along, which turbo-charged the transformer revolution. Fairly quickly in 2018 we arrived at BERT, which was the encoder side of AIAYN and was built into many NLP pipelines as a magical classifying workhorse, and GPT, the foundation model that became the infamous ChatGPT. In 2023, we saw the public release of GPT-4, which took language models from an interesting toy for making you sound like a pirate to a possible production language computer. In 2024, we are still struggling to get anywhere near to the abilities of GPT-4.

With large language models like BERT and GPT, you get embeddings of any text “for free” – it’s a first stage of the model. We can thus now embed longer strings of text and convert them into vector representations. These vectors can then be compared using mathematics. STEM – 1 ; humanities – 0 (just don’t take a close look at society).

Settlers in a Brave New World

The power of word embeddings and large language models now opens up whole new avenues of “legal word processing” that were previously unimaginable. We’ve touched on using retrieval augmented generation here and here. We can apply the same approaches to patent documents and claims.

We now have a form of computer that takes a text input and produces a text output. We don’t quite know how it works but it seems to pass the Turing Test, while reasoning in a slightly stunted and alien manner.

This then provides the opportunity to automate the process described above, to arrive at automated infringement and novelty opinions. At scale. While we sleep. For pennies.

I’m excited.
