When working with neural network architectures we need good datasets for training. The problem is good datasets are rare. In this post I sketch out some ideas for building a dataset of smaller, linked portions of a patent specification. This dataset can be useful for training natural language processing models.

What are we doing?

We want to build some neural network models that draft patent specification text automatically.

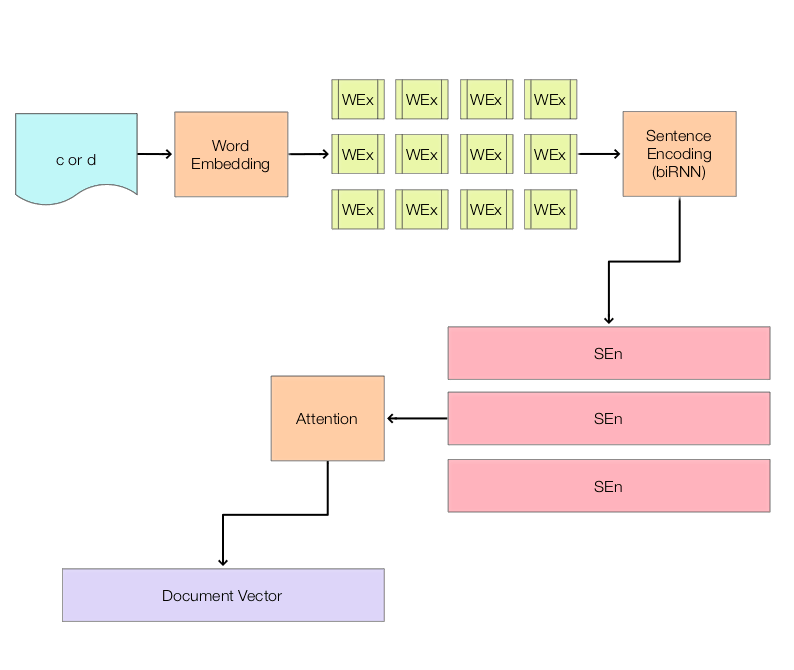

In the field of natural language processing, neural network architectures have shown limited success in creating captions for images (kicked off by this paper) and text generation for dialogue (see here). The question is: can we get similar architectures to work on real-world data sources, such as the huge database of patent publications?

How do you draft a patent specification?

As a patent attorney, I often draft patent specifications as follows:

- Review invention disclosure.

- Draft independent patent claims.

- Draft dependent patent claims.

- Draft patent figures.

- Draft patent technical field and background.

- Draft patent detailed description.

- Draft abstract.

The invention disclosure may be supplied as a short text document, an academic paper, or a proposed standards specification. The main job of a patent attorney is to convert this into a set of patent claims that have broad coverage and are difficult to work around. The coverage may be limited by pre-existing published documents. These may be previous patent applications (e.g. filed by a company or its competitors), cited academic papers or published technical specifications.

Where is the data?

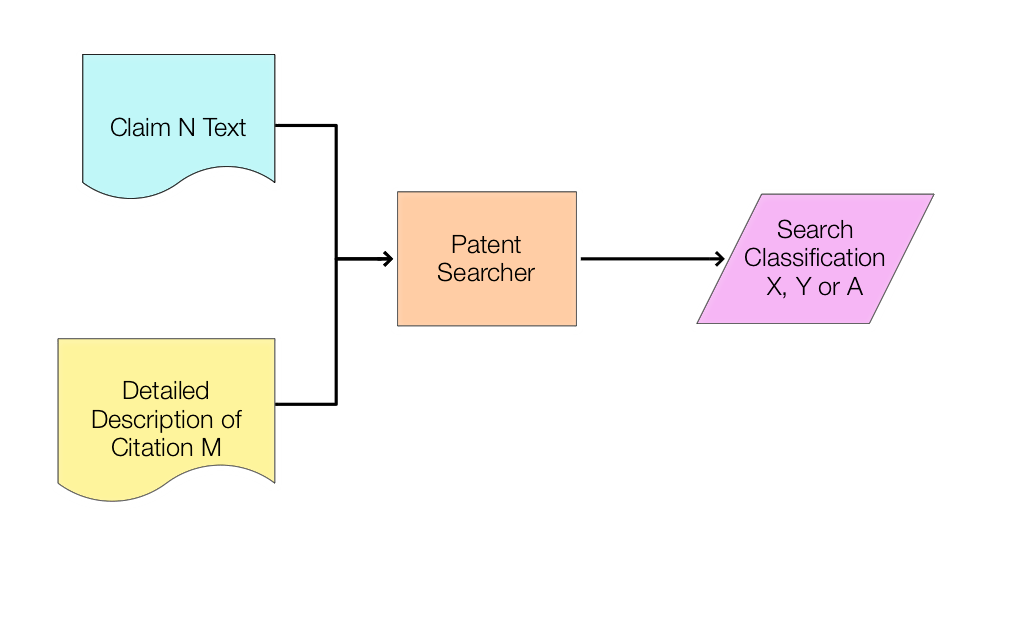

As many have commented, when working with neural networks we often need to frame our problem as “map X to Y”, where the neural network learns the mapping when presented with many examples. In the patent world, what can we use as our Xs and Ys?

- If you work in a large company you may have access to internal reports and invention disclosures. However, these are rarely made public.

- To obtain a patent, you need to publish the patent specification. This means we have multiple databases of millions of documents. This is a good source of training data.

- Standards submissions and academic papers are also published. The problem is there is no structured dataset that explicitly links documents to patent specifications. The best we can do is a fuzzy match using inventor details and subject matter. However, this would likely be noisy and require cleaning by hand.

- US provisional applications are occasionally made up of a “rough and ready” pre-filing document. These may be available as priority documents on later-filed patent applications. The problem here is that a human being would need to inspect each candidate case individually.

Claim > Figure > Description

At present, the research models and datasets have small amounts of text data. The COCO image database has one-sentence annotations for a range of pictures. Dialogue systems often use tweet or text-message length text segments (i.e. 140-280 characters). A patent specification in comparison is monstrous (around 20-100 pages). Similarly there may be 3 to 30 patent figures. Claims are better – these tend to be around 150 words (but can be pages).

To experiment with a self-drafting system, it would be nice to have a dataset with examples as follows:

- Independent claim: one independent claim of one predefined category (e.g. system or method) with a word limit.

- Figure: one figure that shows mainly the features of the independent claim.

- Description: a handful of paragraphs (e.g. 1-5) that describe the Figure.

We could then play around with architectures to perform the following mappings:

- Independent claim > Figure (i.e. task 4 above).

- Independent claim + Figure > Description (i.e. task 6 above).

One problem is this dataset does not naturally exist.

Another problem is that ideally we would like at least 10,000 examples. If you spent an hour collating each example, and did this for three hours a day, it would take you nearly a decade. (You may or may not also be world class in example collation.)
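As a rough check on that estimate, here is the arithmetic using only the figures in the paragraph above. Working every day of the year is my own simplifying assumption.

```python
# Back-of-envelope estimate of manual collation time.
EXAMPLES_NEEDED = 10_000
HOURS_PER_EXAMPLE = 1
HOURS_PER_DAY = 3
DAYS_PER_YEAR = 365  # assumption: no weekends or holidays off

total_hours = EXAMPLES_NEEDED * HOURS_PER_EXAMPLE
total_days = total_hours / HOURS_PER_DAY
total_years = total_days / DAYS_PER_YEAR

print(f"{total_hours} hours, about {total_days:.0f} days, about {total_years:.1f} years")
# prints: 10000 hours, about 3333 days, about 9.1 years
```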

The long way

Because of the problems above it looks like we will need to automate the building of this dataset ourselves. How can we do this?

If I were to do this manually, I would:

- Get a list of patent applications in a field I know (e.g. G06).

- Choose a category – maybe start with apparatus/system.

- Get the PDF of the patent application.

- Look at the claims – extract an independent claim of the chosen category. Paste this into a spreadsheet.

- Look at the Figures. Find the Figure that illustrates most of the claim features. Save this in a directory with a sensible name (e.g. linked to the claim).

- Look at the detailed description. Copy and paste the passages that mention the Figure (e.g. all those paragraphs that describe the features in Figure X). This is often a continuous range.
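The claim-selection step in the list above is probably the easiest to automate. Below is a minimal sketch, assuming the claims have already been parsed into a dictionary of claim number to claim text. The function names and the back-reference heuristic are my own illustration, not taken from any existing library, and the heuristic will wrongly skip independent claims that refer to another claim (e.g. "a system configured to perform the method of claim 1").

```python
import re
from typing import Dict, List, Optional, Tuple

# Heuristic: a dependent claim refers back to another claim, e.g.
# "according to claim 1" or "as claimed in any preceding claim".
DEPENDENCY_PATTERN = re.compile(
    r"\bclaims?\s+\d+|\b(?:preceding|previous|above)\s+claims?\b",
    re.IGNORECASE,
)


def is_independent(claim_text: str) -> bool:
    """Treat a claim as independent if it never refers to another claim."""
    return DEPENDENCY_PATTERN.search(claim_text) is None


def first_independent_claim(
    claims: Dict[int, str], category_terms: List[str]
) -> Optional[Tuple[int, str]]:
    """Return the lowest-numbered independent claim of the given category."""
    for number in sorted(claims):
        text = claims[number]
        if not is_independent(text):
            continue
        # The category is normally stated in the opening words of the claim.
        preamble = text[:100].lower()
        if any(term in preamble for term in category_terms):
            return number, text
    return None


claims = {
    1: "A method of processing data, comprising: receiving (10) a request.",
    2: "The method of claim 1, wherein the request is encrypted.",
    3: "A system (100) for processing data, comprising: "
       "a processor (102); and a memory (104).",
}
match = first_independent_claim(claims, ["apparatus", "system"])
print(match[0] if match else None)
```

Here claim 1 is skipped because it is a method claim, claim 2 because it refers back to claim 1, and claim 3 is returned.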

The shorter way

There may be a way we can cheat a little. However, this might only work for granted European patents.

One ~~bug-bear~~ enjoyable part of being a European patent attorney is adding reference numerals to the claims to comply with Rule 43(7) EPC. Now where else can you find reference numerals? Why, in the Figures and in the detailed description. Huzzah! A correlation.

So a rough plan for an algorithm would be as follows:

- Get a list of granted EP patents (this could comprise a search output).

- Define a claim category (e.g. based on a string pattern – [“apparatus”, “system”]).

- For each patent in the list:

    - Fetch the claims using the EPO OPS “Fulltext Retrieval” API.

    - Process the claims to locate the lowest-numbered independent claim of the defined claim category (my PatentData Python library has some tools to do this).

    - If a match is found:

        - Save the claim.

        - Extract reference numerals from the claim (this could be achieved by looking for text in parentheses or using a “NUM” part of speech from spaCy).

        - Fetch the description text using the EPO OPS “Fulltext Retrieval” API.

        - Extract paragraphs from the description that contain the extracted reference numerals (likely with some threshold – e.g. consecutive paragraphs with greater than 2 or 3 inclusions).

        - Save the paragraphs and the claim, together with an identifier (e.g. the published patent number).

        - Determine a candidate Figure number from the extracted paragraphs (e.g. by looking for “FIG* [\d]”).

        - Fetch that Figure using the EPO OPS “Drawings” or images retrieval API.

            - Note that we can’t retrieve specific Figures, only specific sheets of drawings, and the sheet number will match the Figure number in only ~50% of cases.

            - We can either:

                - Retrieve all the Figures and then OCR these looking for a match with the Figure number and/or the reference numbers.

                - Start with a sheet equal to the Figure number, OCR, then if there is no match, iterate up and down the sheets until a match is found.

                - See if we can retrieve a mosaic featuring all the Figures, OCR that and look for the sheet number preceding a Figure or reference numeral match.

        - Save the Figure as something loadable (TIFF format is standard) with a name equal to the previous identifier.
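The text-only steps of this plan (extracting reference numerals, selecting matching paragraphs and guessing a Figure number) can be sketched with regular expressions alone. This is a rough illustration under my own assumptions: numerals appear in parentheses in the claim, the description is already split into a list of paragraph strings, and all function names and the default threshold are hypothetical. Fetching from the EPO OPS is deliberately left out.

```python
import re
from collections import Counter
from typing import List, Optional, Set

# Reference numerals normally sit in parentheses in granted EP claims,
# e.g. "a processor (102)" or "a housing (12a, 12b)".
PAREN_PATTERN = re.compile(r"\(([^()]*)\)")
NUMERAL_PATTERN = re.compile(r"\b\d+[a-z]?\b")
FIGURE_PATTERN = re.compile(r"\bFIG(?:URE)?S?\.?\s*(\d+)", re.IGNORECASE)


def extract_reference_numerals(claim_text: str) -> Set[str]:
    """Collect numerals found inside parentheses in the claim."""
    numerals: Set[str] = set()
    for group in PAREN_PATTERN.findall(claim_text):
        numerals.update(NUMERAL_PATTERN.findall(group))
    return numerals


def count_hits(paragraph: str, numerals: Set[str]) -> int:
    """Count how many distinct claim numerals a paragraph mentions."""
    return len(set(NUMERAL_PATTERN.findall(paragraph)) & numerals)


def select_paragraphs(
    paragraphs: List[str], numerals: Set[str], min_hits: int = 3
) -> List[str]:
    """Return the longest run of consecutive paragraphs that each
    mention at least `min_hits` distinct claim numerals."""
    best: List[str] = []
    current: List[str] = []
    for paragraph in paragraphs:
        if count_hits(paragraph, numerals) >= min_hits:
            current.append(paragraph)
            if len(current) > len(best):
                best = list(current)
        else:
            current = []
    return best


def candidate_figure_number(paragraphs: List[str]) -> Optional[int]:
    """Return the Figure number mentioned most often, if any."""
    counts = Counter(
        int(number)
        for paragraph in paragraphs
        for number in FIGURE_PATTERN.findall(paragraph)
    )
    return counts.most_common(1)[0][0] if counts else None


claim = ("A system (100) for processing data, comprising: "
         "a processor (102); and a memory (104).")
description = [
    "Figure 1 shows a prior art arrangement.",
    "Figure 2 shows a system 100 comprising a processor 102 and a memory 104.",
    "The processor 102 of Figure 2 reads data from the memory 104 of the system 100.",
    "Other variations are possible.",
]
numerals = extract_reference_numerals(claim)
selected = select_paragraphs(description, numerals)
figure = candidate_figure_number(selected)
print(sorted(numerals), len(selected), figure)
```

On this toy input the two middle paragraphs are selected and Figure 2 is the candidate. Real claims will contain parenthesised text that is not a reference numeral, so the output would still need checking.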

The output from running this would be a triple similar to this: (claim_text, paragraph_list, figure_file_path).
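One way to hold that triple is a small record type that can be written out one example per line. This is only a sketch: the `identifier` field and the JSON Lines storage format are my own assumptions, and the publication number below is a placeholder rather than a real patent.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class DraftingExample:
    identifier: str            # e.g. the published patent number
    claim_text: str
    paragraph_list: List[str]
    figure_file_path: str


def to_json_line(example: DraftingExample) -> str:
    """Serialise one example as a single line of JSON."""
    return json.dumps(asdict(example), ensure_ascii=False)


def from_json_line(line: str) -> DraftingExample:
    """Rebuild an example from a line written by to_json_line."""
    return DraftingExample(**json.loads(line))


example = DraftingExample(
    identifier="EP0000000B1",  # placeholder, not a real publication
    claim_text="A system (100) comprising a processor (102).",
    paragraph_list=["Figure 2 shows a system 100 with a processor 102."],
    figure_file_path="figures/EP0000000B1.tif",
)
restored = from_json_line(to_json_line(example))
print(restored == example)
```

Storing the file path rather than the image itself keeps the text records small and easy to inspect by hand.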

We might want some way to clean any results – or at least view them easily so that a “gold standard” dataset can be built. This would lend itself to a Mechanical Turk exercise.

We could break down the text data further – the claim text into clauses or “features” (e.g. based on semi-colon placement) and the paragraphs into clauses or sentences.
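A minimal sketch of that breakdown is below. The semi-colon split follows the idea above; the sentence splitter is a deliberately naive regular expression of my own and would need replacing (for example with spaCy) for real description text full of abbreviations.

```python
import re
from typing import List


def split_claim_features(claim_text: str) -> List[str]:
    """Split a claim into features at semi-colons, dropping a leading 'and'."""
    parts = re.split(r";\s*(?:and\s+)?", claim_text)
    return [part.strip(" .") for part in parts if part.strip(" .")]


def split_sentences(paragraph: str) -> List[str]:
    """Naive split: sentence-ending punctuation followed by a capital letter."""
    parts = re.split(r"(?<=[.!?])\s+(?=[A-Z])", paragraph)
    return [part.strip() for part in parts if part.strip()]


claim = ("A system (100) for processing data, comprising: "
         "a processor (102); and a memory (104).")
features = split_claim_features(claim)
sentences = split_sentences("The processor 102 reads data. The memory 104 stores it.")
print(features)
print(sentences)
```

Note that the first feature still contains the claim preamble up to the first semi-colon; splitting at the colon as well would be an easy extension.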

The image data is black and white, so we could resize and resave each TIFF file as a binary matrix of a common size. We could also use any OCR data from the file.
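To make the "binary matrix of a common size" idea concrete, here is a dependency-free sketch. It assumes the TIFF has already been decoded (by an imaging library, not shown) into rows of greyscale values from 0 to 255. The function name, the threshold and the nearest-neighbour sampling are my own choices, and nearest-neighbour sampling will drop thin lines when shrinking a lot.

```python
from typing import List


def to_binary_matrix(
    pixels: List[List[int]],
    out_height: int,
    out_width: int,
    threshold: int = 128,
) -> List[List[int]]:
    """Resize by nearest-neighbour sampling and mark dark pixels (ink) as 1."""
    in_height = len(pixels)
    in_width = len(pixels[0])
    matrix = []
    for row in range(out_height):
        source_row = row * in_height // out_height
        matrix.append([
            1 if pixels[source_row][col * in_width // out_width] < threshold else 0
            for col in range(out_width)
        ])
    return matrix


# A toy 4x4 "page" with a dark block in the top-left corner.
page = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [255, 255, 255, 255],
    [255, 255, 255, 255],
]
small = to_binary_matrix(page, out_height=2, out_width=2)
print(small)
# prints: [[1, 0], [0, 0]]
```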

What do we need to do?

We need to code up a script to run the algorithm above. If we are downloading large chunks of text and images we need to be careful of exceeding the EPO’s terms of use limits. We may need to code up some throttling and download monitoring. We might also want to carefully cache our requests, so that we don’t download the same data twice.
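The throttling, monitoring and caching could all live in one thin wrapper around whatever function actually performs the download. The sketch below is hypothetical throughout: `fetch` stands in for a real EPO OPS call that is not shown, and the default one-second interval is a placeholder, not the EPO's actual limit.

```python
import hashlib
import time
from pathlib import Path
from typing import Callable, Optional


class CachedThrottledFetcher:
    """Wrap a download function with an on-disk cache and a minimum delay."""

    def __init__(
        self,
        fetch: Callable[[str], bytes],
        cache_dir: Path,
        min_interval: float = 1.0,  # seconds between real requests (placeholder)
    ) -> None:
        self.fetch = fetch
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.min_interval = min_interval
        self.bytes_downloaded = 0  # simple download monitoring
        self._last_request: Optional[float] = None

    def get(self, key: str) -> bytes:
        # Cache hit: never download the same data twice.
        path = self.cache_dir / hashlib.sha256(key.encode("utf-8")).hexdigest()
        if path.exists():
            return path.read_bytes()
        # Throttle: wait until min_interval has passed since the last request.
        if self._last_request is not None:
            wait = self.min_interval - (time.monotonic() - self._last_request)
            if wait > 0:
                time.sleep(wait)
        data = self.fetch(key)
        self._last_request = time.monotonic()
        self.bytes_downloaded += len(data)
        path.write_bytes(data)
        return data
```

Usage would look something like `fetcher = CachedThrottledFetcher(my_ops_call, Path("cache"))` followed by `fetcher.get("some-request-key")`, where `my_ops_call` is whatever function you write to talk to the API. Checking `fetcher.bytes_downloaded` against a budget before each run is one crude way to stay inside the terms of use.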

Initially we could start with a smaller dataset of say 10 or 100 examples. Get that working. Then scale out to many more.

If the EPO OPS is too slow or our downloads are too large, we could use (i.e. buy access to) a bulk data collection. We might want to design our algorithm so that the processing may be performed independently of how the data is obtained.
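One way to keep the processing independent of the data source is to code against a small interface and swap implementations behind it. The sketch below is an assumption about how that might look: `PatentSource`, its method names and `InMemorySource` are all hypothetical, and a real OPS or bulk-data implementation would provide the same three methods.

```python
from typing import Dict, Iterable, List, Protocol


class PatentSource(Protocol):
    """Anything that can supply the raw material for one publication."""

    def get_claims(self, publication_number: str) -> Dict[int, str]: ...

    def get_description(self, publication_number: str) -> List[str]: ...

    def get_drawing_sheet(self, publication_number: str, sheet: int) -> bytes: ...


class InMemorySource:
    """Toy source for testing the processing code without any downloads."""

    def __init__(self, claims: Dict[str, Dict[int, str]]) -> None:
        self._claims = claims

    def get_claims(self, publication_number: str) -> Dict[int, str]:
        return self._claims[publication_number]

    def get_description(self, publication_number: str) -> List[str]:
        return []

    def get_drawing_sheet(self, publication_number: str, sheet: int) -> bytes:
        return b""


def first_claims(source: PatentSource, numbers: Iterable[str]) -> Dict[str, str]:
    """Example of processing code that only depends on the interface."""
    return {number: source.get_claims(number)[1] for number in numbers}


source = InMemorySource({"EP0000000B1": {1: "A system (100)."}})  # placeholder number
print(first_claims(source, ["EP0000000B1"]))
```

The same `first_claims` function would then run unchanged whether the data comes from the API, a cache on disk, or a purchased bulk collection.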

Another Option

Another option is to use the front page images of patent publications, which are often available. The Figure published with the abstract is often that which the patent examiner or patent drafter thinks best illustrates the invention. We could try to match this with an independent claim. The figure image supplied, though, is smaller. This may be a backup option if our main plan fails.

Wrapping Up

So. We now have a plan for building a dataset of claim text, description text and patent drawings. If the text data is broken down into clauses or sentences, this would not be a million miles away from the COCO dataset, but for patents. This would be a great resource for experimenting with self-drafting systems.